Introduction

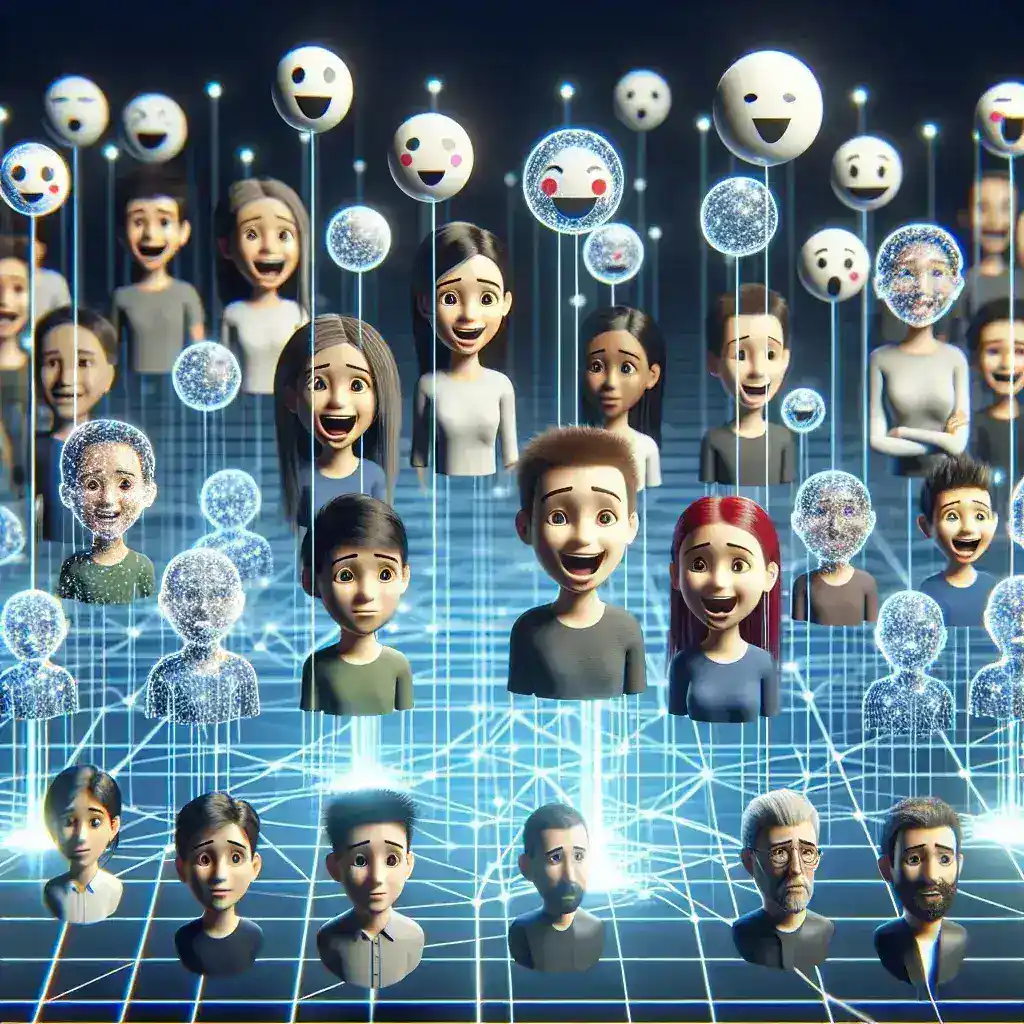

Meta has added emotional state indicators to Horizon avatars, a feature designed to foster deeper connections and richer interactions in virtual spaces. The indicators reflect how a user is feeling, with the aim of making social interactions in virtual environments feel more genuine and emotionally resonant.

The Evolution of Avatars in Virtual Reality

Avatars have evolved significantly since the early days of video gaming. Early avatars were simple digital stand-ins for the player, with limited functionality and expression. As technology advanced, they became more sophisticated representations of individuals, particularly in virtual reality (VR).

Where avatars were once static images or simple 3D models, VR developers increasingly focus on making them lifelike and relatable. That shift puts emotional expression at the center of virtual interaction, which is the gap Meta’s recent update aims to address.

Understanding Emotional State Indicators

Emotional state indicators change how Horizon users communicate their feelings in virtual spaces. They display a user’s emotions in real time, making the experience more immersive. The key components are:

- Real-Time Emotion Detection: AI models interpret emotional cues from user interactions and inputs.

- Visual Representation: Users can see distinct visual cues, such as changes in facial expressions, body language, and even color shifts that reflect various emotional states.

- Enhanced Communication: By visualizing emotions, users can better understand each other’s feelings, leading to more empathetic interactions.

How Emotional Indicators Work

The functionality of emotional state indicators relies on complex AI systems that analyze user behavior and engagement. Here’s a simplified breakdown of how it works:

- Data Collection: The system collects data from users’ interactions, such as voice tone, text sentiment, and movement patterns.

- Emotion Analysis: Machine learning models classify this data into emotional states such as happiness, sadness, anger, or surprise.

- Visual Feedback: The avatar’s appearance changes dynamically to reflect the analyzed emotions, providing real-time feedback to other users.
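The three steps above can be illustrated with a small, self-contained sketch. It does not reflect Meta’s actual implementation: the signal names (`voice_pitch_variation`, `text_sentiment`, and so on), the hand-written weights that stand in for a trained model, and the `VISUAL_CUES` color mapping are all hypothetical, chosen only to show how collected data could flow through classification into an avatar update.

```python
from dataclasses import dataclass

# Hypothetical signal names, weights, and cues; NOT Meta's actual features or model.


@dataclass
class InteractionSignals:
    """Step 1 (data collection): per-user signals, each scaled to a fixed range."""
    voice_pitch_variation: float  # 0..1, how much the speaker's pitch varies
    voice_loudness: float         # 0..1, relative loudness of speech
    text_sentiment: float         # -1 (negative) .. +1 (positive), from chat text
    movement_energy: float        # 0..1, amount of head and hand movement


def score_emotions(s: InteractionSignals) -> dict[str, float]:
    """Step 2 (emotion analysis): toy weighted rules standing in for a trained model."""
    positive = max(s.text_sentiment, 0.0)
    negative = max(-s.text_sentiment, 0.0)
    return {
        "happiness": 0.6 * positive + 0.4 * s.movement_energy,
        "sadness": 0.7 * negative + 0.3 * (1.0 - s.movement_energy),
        "anger": 0.5 * negative + 0.5 * s.voice_loudness,
        "surprise": 0.6 * s.voice_pitch_variation + 0.4 * s.movement_energy,
    }


# Step 3 (visual feedback): hypothetical mapping from emotion to avatar cues.
VISUAL_CUES = {
    "happiness": {"expression": "smile", "aura_color": "#FFD54F"},
    "sadness": {"expression": "frown", "aura_color": "#64B5F6"},
    "anger": {"expression": "furrowed_brow", "aura_color": "#E57373"},
    "surprise": {"expression": "raised_eyebrows", "aura_color": "#BA68C8"},
}


def avatar_update(s: InteractionSignals) -> dict:
    """Run all three steps and return the cue to broadcast to other users."""
    scores = score_emotions(s)
    label = max(scores, key=scores.get)  # the highest-scoring emotion wins
    return {"emotion": label, "score": round(scores[label], 2), **VISUAL_CUES[label]}


if __name__ == "__main__":
    upbeat = InteractionSignals(voice_pitch_variation=0.2, voice_loudness=0.3,
                                text_sentiment=0.8, movement_energy=0.7)
    print(avatar_update(upbeat))
```

A production system would replace `score_emotions` with a learned model, but the shape of the pipeline (signals in, one label out, label mapped to visible cues) stays the same.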

The Benefits of Emotional State Indicators

Integrating emotional state indicators into Horizon avatars offers several advantages:

- Improved User Engagement: By enabling users to express their emotions more freely, the platform encourages deeper connections and interactions.

- Enhanced Empathy: Understanding others’ emotional states fosters a sense of empathy among users, creating a supportive online community.

- Rich User Experience: Dynamic avatars that respond to emotions contribute to a more immersive and enjoyable virtual experience.

Challenges and Considerations

While the introduction of emotional state indicators is a positive advancement, it also presents some challenges:

- Privacy Concerns: Users may be apprehensive about their emotional data being analyzed and displayed. Ensuring privacy and data security is paramount.

- Misinterpretation: There is a risk of emotions being misinterpreted, potentially leading to misunderstandings during interactions.

- Technical Limitations: Emotion detection is only as accurate as the technology’s ability to read complex human emotions, which vary significantly from person to person.
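One common way to mitigate the privacy and misinterpretation risks above is to gate what other users see. The sketch below is a hypothetical illustration, not a description of Meta’s settings: it assumes an opt-in flag (`share_emotions`, off by default) and a confidence threshold (`min_confidence`, an arbitrary 0.6), and falls back to a neutral avatar whenever the user has not opted in or the reading is ambiguous.

```python
from dataclasses import dataclass

# Hypothetical safeguards; NOT a description of Meta's actual settings or API.


@dataclass
class DisplaySettings:
    share_emotions: bool = False  # opt-in: nothing is displayed unless the user enables it
    min_confidence: float = 0.6   # below this share, show a neutral avatar instead


def normalize(scores: dict[str, float]) -> dict[str, float]:
    """Turn raw non-negative scores into shares that sum to 1."""
    total = sum(scores.values())
    if total <= 0:
        return {label: 0.0 for label in scores}
    return {label: value / total for label, value in scores.items()}


def displayed_emotion(scores: dict[str, float], settings: DisplaySettings) -> str:
    """Return the emotion other users see, or "neutral" when gated.

    Assumes `scores` is non-empty.
    """
    if not settings.share_emotions:
        return "neutral"  # privacy: the user has not opted in
    shares = normalize(scores)
    label = max(shares, key=shares.get)
    if shares[label] < settings.min_confidence:
        return "neutral"  # ambiguous reading: avoid displaying a likely misinterpretation
    return label


if __name__ == "__main__":
    opted_in = DisplaySettings(share_emotions=True)
    print(displayed_emotion({"happiness": 0.9, "sadness": 0.05, "anger": 0.05}, opted_in))
    print(displayed_emotion({"happiness": 0.5, "surprise": 0.45, "anger": 0.05}, opted_in))
```

The threshold is a trade-off: a lower value displays emotions more often but risks more misreadings, while a higher value shows fewer, more reliable cues.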

Future Predictions

As Meta refines and expands the emotional state indicators, several developments seem plausible:

- Greater Personalization: Future updates could allow users to customize how their emotions are displayed, making interactions even more personal.

- Integration with Other Platforms: There may be opportunities to integrate emotional state indicators across various platforms, creating a consistent user experience.

- Advancements in AI: As AI technology advances, the accuracy of emotion detection is expected to improve, leading to more nuanced interactions.

Cultural Relevance

The incorporation of emotional state indicators reflects broader cultural movements towards emotional intelligence and awareness. In an increasingly digital world, the ability to convey emotions accurately becomes crucial for maintaining meaningful connections.

Expert Opinions

Experts in the field of virtual reality and emotional intelligence have lauded Meta’s initiative:

“The introduction of emotional state indicators in avatars represents a significant step towards more authentic interactions in virtual spaces. This technology could redefine how we connect with one another online,” says Dr. Jane Smith, a leading psychologist specializing in digital communication.

Conclusion

Meta’s addition of emotional state indicators to Horizon avatars is a notable step in the evolution of virtual interactions. By letting users express their emotions visually, Meta aims to increase engagement and foster a more empathetic and understanding online community. As the technology matures, it could support deeper connections and richer experiences in virtual spaces.